The Repost #01: May 2025

Welcome to The Repost, a monthly round-up of what's happening in the world of fact checking and misinformation. We'll be bringing you the news, research and resources you need to make sense of our increasingly messy digital world.

This newsletter is produced by the RMIT Information Integrity Hub, an initiative to combat harmful misinformation by empowering people to be critical media consumers and producers.

In our first edition, we explore the role of deepfakes in the 2025 federal election and bring you the results of our Election Promise Tracker. Plus, the Trump administration turns 100 (days old).

Has AI shifted the dial this election?

As Australians cast their ballots in the first federal poll since the arrival of ChatGPT, many have worried about powerful new artificial intelligence tools and the threat they pose to elections.

Researchers have warned that deepfakes could be used to spread online disinformation, while the Australian Electoral Commission has launched a campaign to educate voters about the risks of being misled by AI-generated content.

Digital deepfakes — or realistic but false depictions of people saying or doing things they did not do — have certainly played some role in elections overseas, including in Indonesia, where a former president endorsed his party from beyond the grave.

So, how has AI featured in the Australian election?

Deepfakes not the main game, yet

Lucas Whittaker, a lecturer in marketing at Swinburne University who studies the use of deepfakes, told The Repost he had seen an uptick in the political usage of AI during the 2025 campaign but fewer deepfakes than he expected.

Likewise, few examples have been turned up by the University of Melbourne's Hunt Laboratory for Intelligence and Security Studies, which has been running a crowdsourced misinformation-monitoring project during the election.

Ariel Kruger, a research fellow with the Hunt Lab, said only five per cent of the posts reviewed by volunteers were flagged as "altered media" — a category that includes deepfakes.

There have nonetheless been some notable examples, including an AI-generated audio clip of US podcaster Joe Rogan supposedly disparaging Minister for Foreign Affairs Penny Wong.

That clip was debunked by AAP FactCheck, whose fact checkers also took on two deepfake videos purporting to show Shadow Minister for Indigenous Australians Jacinta Nampijinpa Price kissing Opposition Leader Peter Dutton and then former radio host Alan Jones.

Deepfakes are also circulating on Chinese-language social media, with researchers from the RECapture project telling The Repost they had identified various deepfakes that were not flagged by the platforms.

Those examples included this video, shared by a Chinese-language media organisation, in which the voiceover for the presenter has been AI-generated.

In a separate case identified by the ABC in March, a deepfake video falsely portrayed Mr Dutton vowing — in Mandarin — to abolish the Aboriginal flag.

Easy to spot, but still a problem?

Arguably, some of the AI-generated images shared during this election campaign look realistic enough that some audiences might just believe their eyes.

But often, the manipulation is more obvious. Take, for example, images that liken Mr Dutton to Donald Trump or show Mr Albanese in drag.

Daniel Miller, a lecturer in psychology at James Cook University who is researching the human detection of deepfakes, told The Repost that deepfakes had been a mainstay of "online joke or meme culture" since their inception in 2017.

Like a lot of AI-generated political content, he said, "most deepfakes aren't necessarily aimed at tricking you but are instead meant to be humorous in a way that pushes a user to share the image on their feed".

Dr Miller said the purpose of misinformation and disinformation was often not to change people's minds but to further entrench pre-existing beliefs.

So, to take one recent example, a clearly fictional photo of Mr Albanese steering an asylum seeker boat might still serve to reinforce preconceptions that Labor is weak on immigration or is allowing non-citizens to vote in the federal election.

"I think the trend has been the generation of deepfake material within certain communities to reaffirm ideological positions, with not really many instances of high-profile ones aimed to deceive the general public," said Swinburne's Dr Whittaker.

But regardless of a creator's intent, AI-generated images can still inadvertently fool audiences — particularly when they agree with the political message being pushed.

Don't get fooled

Memes aside, it seems likely that the main use of AI in this campaign has been to improve "tried-and-tested influence tactics", such as creating sponsored posts that mimic authentic news content, clickbait headlines containing exaggerated claims, and posts featuring content designed to provoke a strong emotional response.

This would echo overseas findings that while AI made an appearance in US and European election campaigns, it did not meaningfully affect the results.

However, it's still worth knowing how to spot a deepfake.

Common signs include awkward hand positions, unnatural blinking, poor audio syncing, or mismatches between a person's facial expressions and the emotional tone of their speech.

Blurs and inconsistencies around a person's mouth or hands or between their head and body can be another flag, as can images or videos that look "too perfect".

If you come across content that you suspect is misleading but aren't sure, check out these free tools for detecting AI-generated photos and videos.

Election promises: Did Labor deliver?

At the end of its term, the Albanese government has delivered roughly 70 per cent of the key pledges it made during the 2022 election campaign, according to our Election Promise Tracker.

As many readers would recall, the tracker is an online tool that tracks 66 commitments made by Labor ahead of the last election, providing an independent assessment of the government's performance.

Labor failed to deliver on 20 per cent of the promises being tracked, while 10 per cent were thwarted or remained either stalled or in progress at the time the election was called.

The promise tracker was launched by RMIT ABC Fact Check in 2023 and has continued as part of the RMIT Information Integrity Hub.

The results are essential reading heading into the 2025 election, and particularly handy if you're one of the many voters who can't recall what the government achieved over the last three years.

We've published a summary of the results in The Conversation, along with our tips on how to make sense of what's being promised during this year's campaign.

The full results are available on the RMIT promise tracker website.

The whip around

The Trump administration has cut misinformation research funding (MelissaMN - stock.adobe.com)

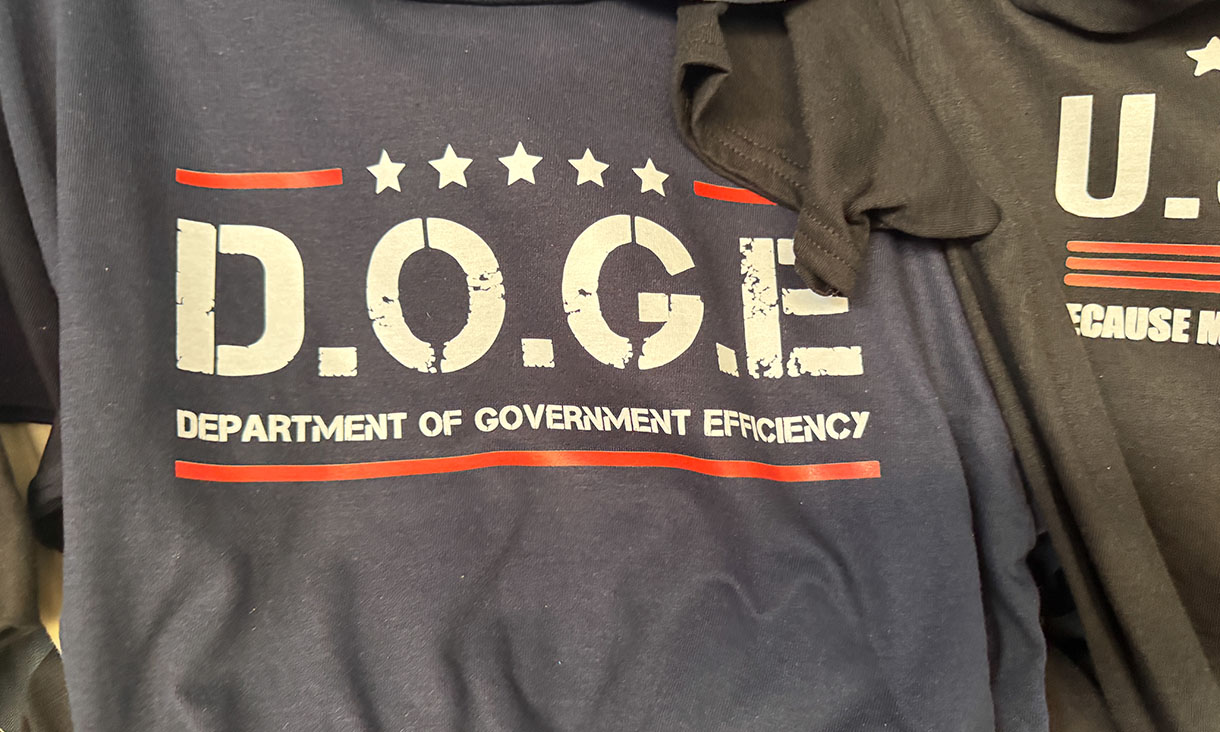

- This week marked 100 days of US President Donald Trump's second term, which began with a blizzard of executive orders. Unfortunately, many of his actions have been justified on the basis of "false, misleading and hyperbolic claims", the New York Times reports. According to fact checkers with NewsGuard, 20 false claims about Mr Trump, spread mostly by his supporters, attracted 134 million views on social media.

For its part, the White House claims that the first 100 days have been characterised by "a nonstop deluge of hoaxes and lies from Democrats and their allies in the Fake News suffering from terminal cases of Trump Derangement Syndrome".

- Also in the US, hundreds of misinformation researchers have had their funding slashed by the National Science Foundation on the basis that their work does not align with the government's priorities, Nieman Lab reports. The Department of Defense is also terminating its entire social science research program, including studies related to disinformation.

- The death of Pope Francis last week triggered a surge of misinformation, despite the late pontiff being no fan of falsehoods. On social media, some users have sought to rewrite his legacy with false claims that he had advocated for the euthanasia of people with disabilities or congratulated Vladimir Putin upon his re-election in 2024.

- The International Fact-Checking Network has released its annual report on the state of the global fact-checking industry. It makes for sombre reading, with many fact checkers facing harassment and increased financial uncertainty following Meta's decision to pull the plug on its US third-party fact-checking program.

- On a lighter note, academics from Queensland University of Technology and RMIT have rated the best and worst political memes of the federal election campaign. Cringe comedy fans should find plenty to enjoy.

- And as you head out to vote on Saturday, be sure to grab an election day snag. To avoid disappointment, visit the Democracy Sausage map, which tracks all the voting centres with sausage stands.