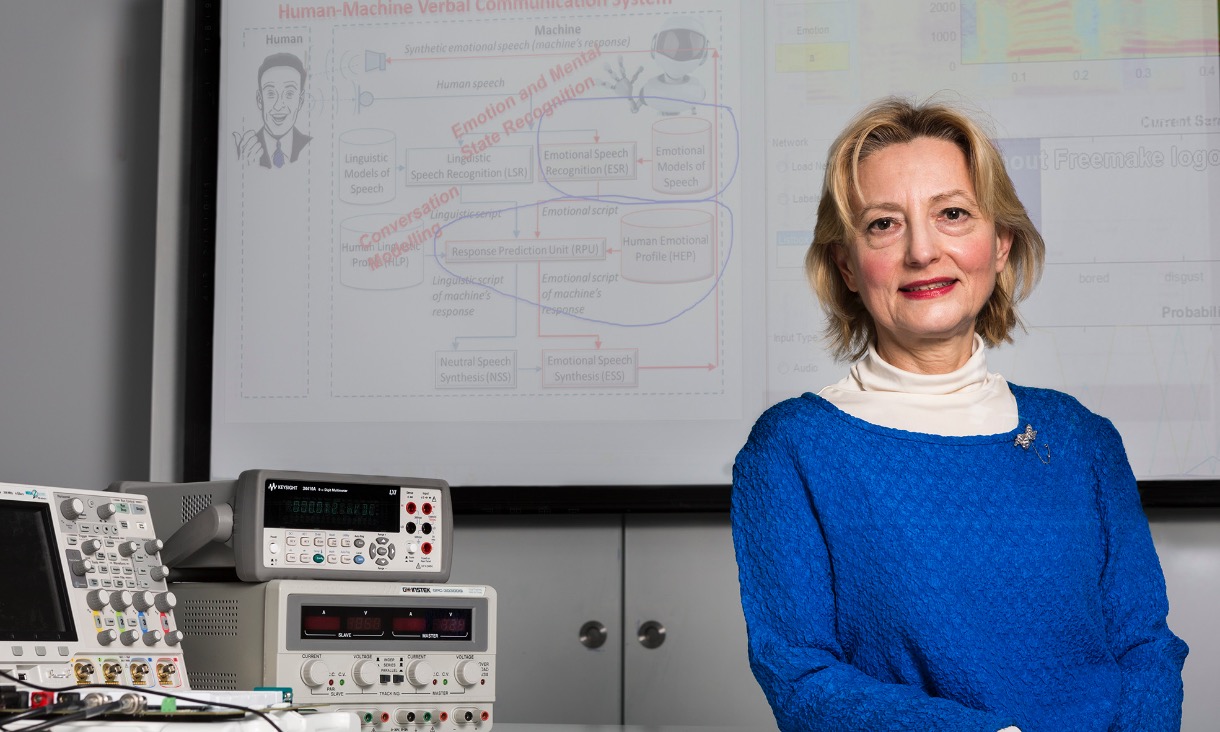

Study reveals the reasons women leave cyber security: bullying, 24/7 culture, pay gap

New research from RMIT University has investigated why women are under-represented in Australia’s cyber security workforce and why the few who do enter the sector often end up leaving it.

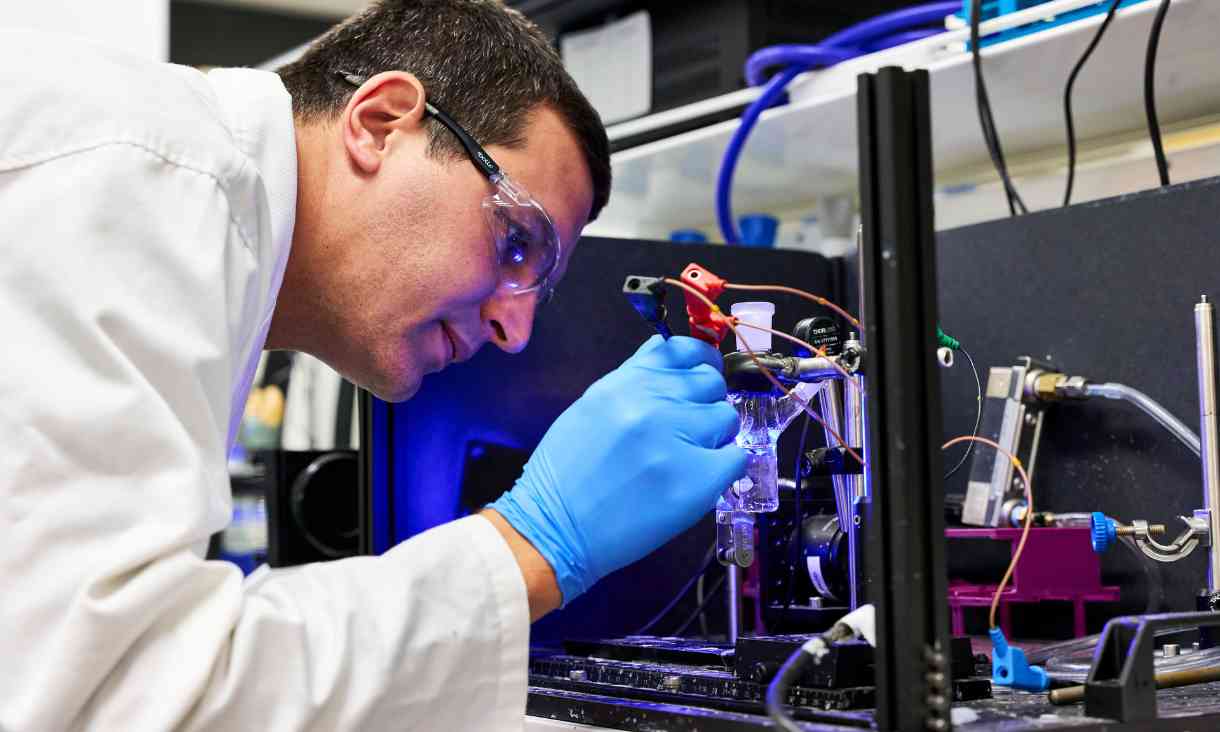

RMIT cuts ribbon on world-class nanomanufacturing research centre

RMIT University has launched the Centre for Atomaterials and Nanomanufacturing (CAN), which will pioneer atomaterial research translation to drive commercial growth for Australia.

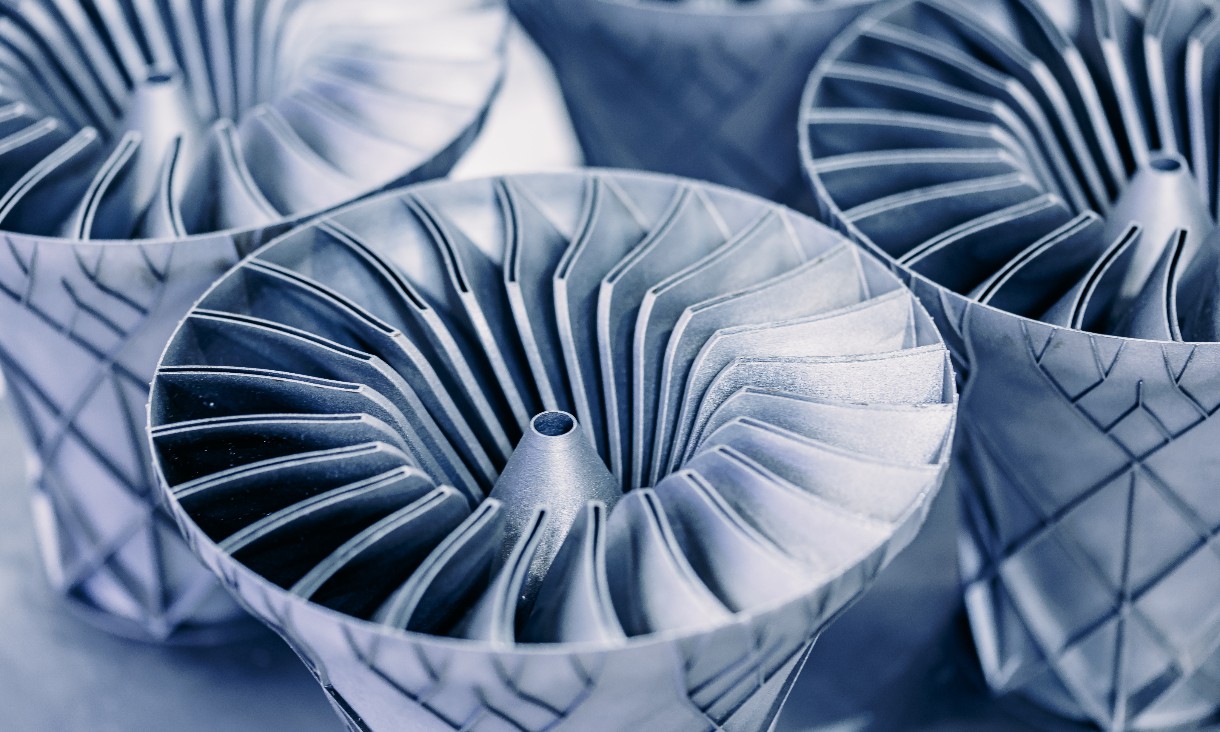

Enabling circular economy practices in sustainable metal production

Researchers in the DIAMETER project will develop digital platforms focused on augmented sustainability and circularity of additive manufacturing and machining processes.

Confinement may affect how we smell and feel about food

New research from RMIT University found that confined and isolating environments changed the way people smelled and responded emotionally to certain food aromas.