CHAI Kicks Off 2026 with Inaugural Strategy Workshop

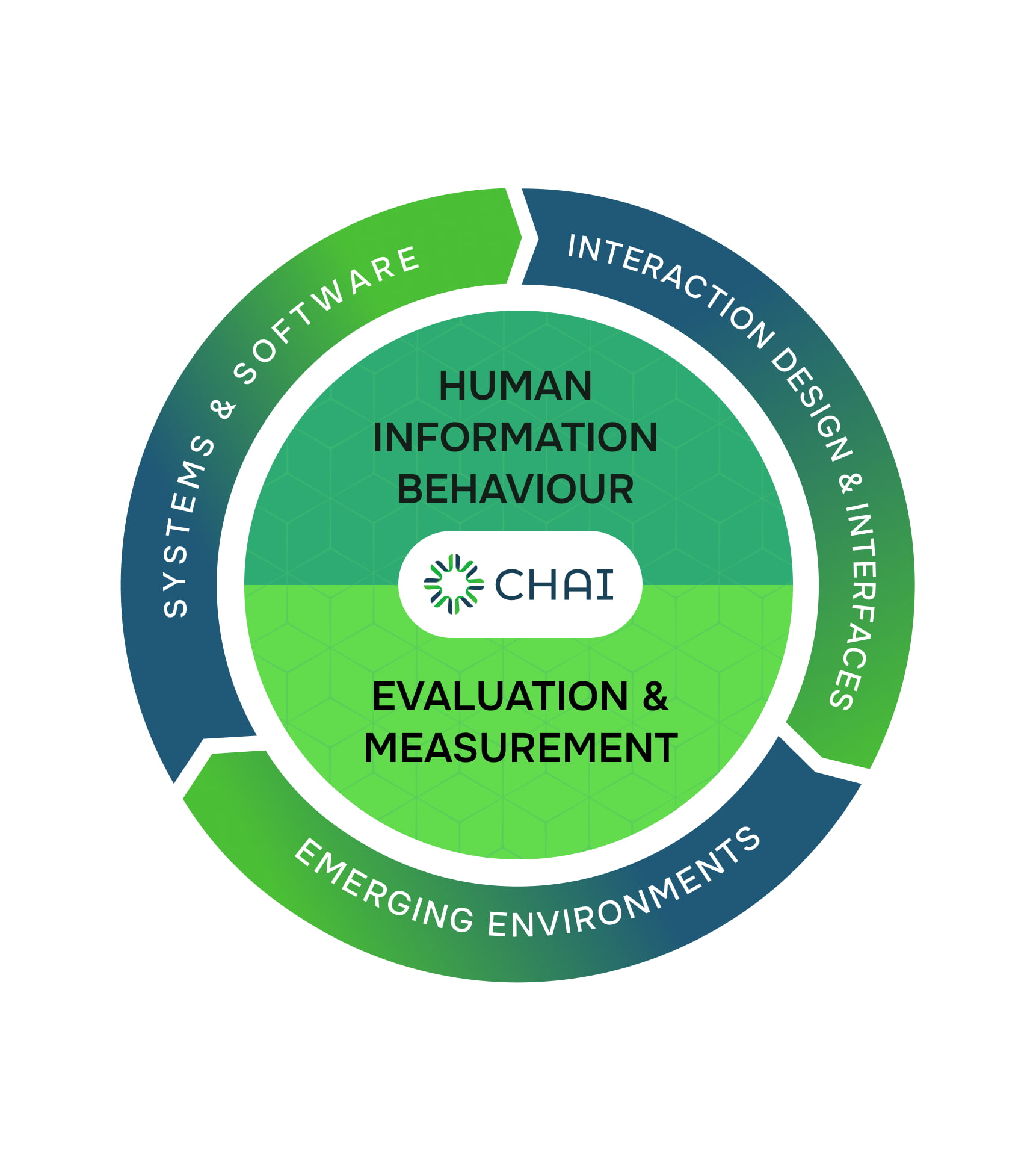

The Centre for Human-AI Innovation (CHAI) has launched 2026 with strong momentum, hosting its inaugural research strategy workshop at Storey Hall, RMIT University. The highly productive session brought together members from across disciplines to shape CHAI’s research priorities for the next three years. Discussions focused on identifying emerging themes in the human–AI landscape and exploring how deeper interdisciplinary collaboration can amplify research impact. The workshop also featured valuable perspectives from invited speakers Julie Stevens, Javier Grávalos Moreno, and Angel Calderon, whose contributions helped ground conversations in opportunity, global connection, and long-term direction. With strong engagement, thoughtful dialogue, and shared ambition across the group, the event marked an energising first step in defining CHAI’s next phase of research leadership.

The AI Con, RMIT Storey Hall: 1 July 2025

This event was co-funded by the Academy of the Social Sciences in Australia (ASSA) and RMIT’s Social Change Enabling Impact Platforms (EIP), with generous support from Readings Bookshop. Professor Bender delivered a captivating and accessible primer on how chatbots really work, pulling back the curtain on the linguistic sleight of hand that drives today’s so-called “AI revolution.” With clarity, humour, and deep expertise, she helped the audience see beyond the hype, showing how language and meaning operate in ways machines can only mimic. Following the talk, Dr Kobi Leins joined Professor Bender for a dynamic and thought-provoking conversation about Bender’s latest book, The AI Con: How to Fight Big Tech’s Hype and Create the Future We Want. Together, they explored how AI hype fuels misinformation, masks power imbalances, and shapes our collective future, and how we can all play a role in resisting it. The discussion sparked lively questions and reflections from the audience, leaving everyone inspired to think more critically about the technologies shaping our information environment. It was an evening that perfectly captured the spirit of thoughtful, interdisciplinary engagement that defines our community.